When Eric Booth’s 90-year-old grandmother came to visit, her hearing had deteriorated so badly that even with hearing aids, she had a difficult time understanding what people were saying. He watched her lean in closely to speakers and try to read their lips, struggling to comprehend what was said. When more than one speaker was involved, she’d often lose track of the conversation.

Then Eric – a cloud senior business development manager at Micron – had an idea. His grandmother had a smart phone, so why not let it “listen” for her? He opened her notes application, pushed the microphone button, and showed her how it transcribed his speech into text on the screen.

“She was just so excited, grinning from ear to ear. Now she was able to participate in conversations where, in the past, she couldn’t,” he says. “This is how this technology can really improve the quality of life for people with speech, language and hearing disorders.”

The technology to transcribe speech to text may seem simple and easy to overlook but it is a complex process that has taken decades to advance to the point it is today.

A fast-advancing technology

It’s been a long time since the first speech-recognition (SR) device, Audrey, debuted. Bell Laboratories introduced Audrey in 1962. This six-foot-high computer comprehended only single-digit numbers. Instead of producing text, it flashed lights corresponding to the digit spoken — nine blinks of the light for the word “nine,” for instance.

Even a few years ago, SR technology was not very user-friendly: often inaccurate, unable to filter out even the slightest ambient sounds, slow to transcribe. SR had a long way to go to be of real use to anyone.

Today, SR is enabled by advances in AI, virtual assistant technology, 5G cellular technology, and memory, storage and computer processing. This enables us to do many things we couldn’t before: communicate in languages we’ve never spoken, transcribe long recordings almost instantly, order – merely by speaking words into the air – almost anything we want for delivery to our front door.

Now, generative AI is elevating this technology further. While speech recognition takes the audio and parses it into text, generative AI processes the text to actually understand what it means. Not just, What are the words? but, What do the words mean? Are the words asking a question? If so, what is the answer?

This type of machine learning can create text, video, images, computer code and other content, based on user prompts or dialogue. Generative AI layered on speech recognition takes learning to a new level, opening up possibilities for this technology to further help people with speech or hearing disabilities.

While nimble speech recognition ingests language that may not follow normal speech patterns, generative AI and natural language processing (NLP) make sense of it and turn it into relevant recommendations. This process enables wholistic, highly personalized speech therapies.

Eric’s own daughter took part in speech therapy, so he knows first-hand about the time and effort required. These experiences inspired him to enroll in a doctoral program at Boise State University in Idaho to research ways that technology can help children with speech disabilities.

“In speech therapy, we used to think that the therapist would give the student content to read and then a tool would score how well they did in pronunciation and enunciation,” explains Eric. “But with generative AI, there is promise of a tool that could handle the whole process. It excels in identifying patterns, so it can tell if a student is, for instance, consistently mispronouncing their Os.”

Large language models

Until recently, speech recognition meant you needed a big server with lots of memory, and any data collected had to go to the cloud. Now, speech recognition is built into your phone. The compute has gotten faster, the memory has gotten faster, and a former data center process is now on your phone.

Soon generative AI processes will also be on your phone or other endpoint device. Because the training process for AI models is not just about making more complex models, but also simplifying them to work in endpoint devices such your phone or PC. As these large language models grow, it’s not possible to do the training outside of a cloud environment. But, once you have it trained, and then simplified, it can move to the endpoint device.

In the last few years, there’s been tremendous advancement on large language models:

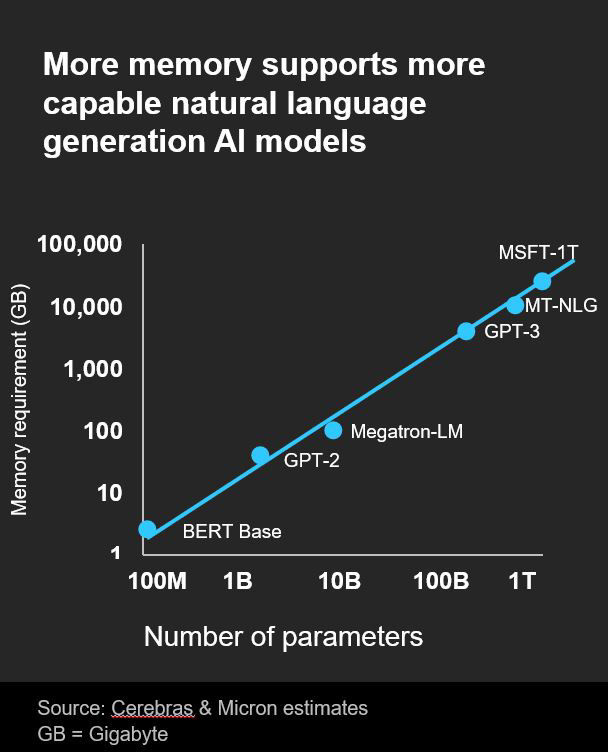

“These models are key to generative AI chatbots and advanced search functions,” says Eric. “Large language models have trillions of parameters. A few years ago, a trillion parameters was unimaginable – it couldn’t be processed. Today, a trillion is baseline. Of course, the bigger the model, the more intelligent it is. And this is exactly what’s driving compute and memory demands.”

NLP and generative AI require robust large language model training, and the more parameters, the more memory is required (see figure 1.)

Figure 1

Figure 1

To wrangle these expanding models, transfer learning has become increasingly popular. This is the idea of training a model with a lot of data in a given context, then fine tuning the parameters in that model for another context with a smaller set of data. Say the large set of data is adult speech and the small set is child speech. Transfer learning gives you a model that is accurate for both. If you tried to train a model that was mostly adult speech with a little child speech thrown in, it wouldn’t be nearly as accurate. The combination of training the data on a robust data set in one context, then moving it to another context and fine tuning it with less data is incredibly effective. Eric has documented much of this progress in his paper, Evaluating and Improving Child-Directed Automatic Speech Recognition.

Pre-training a neural network follows the same idea. (The “P” in ChatGPT™ stands for pre-training.) This also is about training a model on one task or dataset, then using those parameters to train another model on a different task or dataset. For ChatGPT, for example, the model has been pre-trained on huge amounts of conversational data from the internet so that it can answer general questions, then it adapts to the current conversation based on the additional context received from the prompts it is given. This gives the model a head-start instead of starting from scratch. Now you have a robust model with a small amount of data.

Today, many AI researchers are focused on generative AI. And that’s not just because of ChatGPT buzz, it’s also because of the profound potential applications in healthcare and other industries.

Helping those who need it most

More than one million children in the U.S. receive professional help in school for speech and language disorders, according to the American Speech-Language-Hearing Association. Overall, eight percent of children experience speech delays or disabilities, Eric says.

“You can’t just go on the open market and buy a speech therapy package of technology for children,” he says. “It doesn’t exist.” The technology is needed, he says, especially for low-income children. Performing assessments on children requires at least two hours, Eric says, but government programs may pay for only 30 minutes.

“A lot of things that take up the therapist’s time could be done by a computer to free up the therapist to do more long-term planning and more focused therapy sessions,” he says.

Children with learning disabilities such as dyslexia can also benefit from having their spoken words transcribed into text, according to the Learning Disabilities Resources Foundation. Like the ingenious use of talk-to-text to help Eric’s grandmother join into conversations, there are many untapped and unimagined use cases for this foundational AI technology.

Powering generative AI and SR

Today, Micron is developing ever-denser, ever-faster memory and storage that increasingly allow language processing to occur right on a person’s phone rather than in the cloud, saving data transmission time.

To power these endpoint devices, Micron’s low-power double data rate 5X (LPDDR5X) memory delivers a balance of power efficiency and performance for a seamless user experience. LPDDR5X delivers the fastest, most advanced mobile memory, with peak speeds at 8.533 gigabits per second (Gbps), up to 33% faster than the previous generation. LPDDR5X’s speed and bandwidth are essential to have powerful generative AI (literally) at hand.

With generative AI, SR is getting closer and closer to working as quickly and as accurately as the human brain. But formidable obstacles still remain to reach that goal, especially for processing the speech of children, accents, and for people with hearing or speech disabilities. Projects like the one Eric is working on can truly change the way generative AI technologies can enrich the lives of all people.

But generative AI is using deep learning to produce text from speech that is increasingly natural — more like human speech. In the past, AI models exceled at ingesting lots of data, identifying patterns and pinpointing root causes from a diagnostic perspective. Today, generative AI “reads” text and uses that data to make contextual inferences from human communications. It is, in essence, “training” itself. To do so, it needs access to and the ability to absorb enormous amounts of data all at once, to draw from vast stores of memory to determine the proper responses. Micron technologies are making these advances possible.

Micron’s high-density DDR5 DRAM modules and multi-terabyte SSD storage enable the speed and capacity required to train generative AI models in the data center. The newly released HBM3E improves performance even further, delivering 50% more capacity at more than 1.2 terabytes per second bandwidth which can reduce training time for multitrillion parameter AI models by more than 30%. As these technologies get faster and more accurate, more people can “speak” and be heard.

“We’re going to see disruptive leaps in performance in generative AI and SR technology in the near future,” Eric predicts. “It’s just really cool for me to see this technology enriching people’s lives.”